Major investments from tech giants such as AWS, Google, and Microsoft, backed by government support across Southeast Asia (including Thailand, where a 300 billion baht investment is planned), aim to drive adoption of on-demand IT resources and create opportunities for local communities and businesses in the region. As these companies establish data centers and cloud infrastructure locally, businesses running workloads in the cloud stand to benefit from better service quality thanks to proximity, stronger local technical support from growing technical communities, and incentives such as up to a 15% reduction in operating costs. Whether your project is large or small, the sections below cover key considerations your team is likely to encounter when moving into a newly expanding region such as Thailand.

This post offers recommendations on evaluating cloud features, establishing guardrails in the cloud, and setting expectations for what simple and complex projects may experience.

The opinions expressed in this article are my own and are based on my experience as a cloud engineer. Moving into a new cloud region involves more than technical considerations, but the technical side is the primary experience shared here. AI was used to restructure sentences and fix grammatical errors.

Updates

- March 1, 2025: Added context to the database example in the assessment of regional capabilities.

- March 16, 2025: Improved the wording of the introduction and added further takeaways.

Assess Regional Capabilities: Beyond the Datasheet

When exploring how to leverage the heavy-lifting services provided by cloud providers for our projects, it’s important to understand their current offerings in detail. These services range from basic infrastructure like virtual machines and storage to more advanced capabilities such as pre-tuned machine learning models. Each region may offer different levels of service maturity, making a thorough assessment critical before committing to a specific cloud region.

Understanding your use cases is crucial when reviewing the specifications cloud providers publish, both to evaluate a region on paper and, later, to test its real-world capabilities.

Consider a project requiring reduced user latency, typically measured as the time to first byte between your workload and the user. While choosing the closest available location seems logical, this approach isn’t always optimal. Your workload’s architecture plays a crucial role, particularly if its components are distributed across networks rather than running as a monolithic application. In distributed systems, selecting a region solely on proximity can actually increase overall latency if that region lacks certain technical capabilities, forcing additional backend processing or cross-region communication. Therefore, when adopting cloud services in a specific region, it’s essential to systematically evaluate both the capabilities and the availability of the services you require.
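A back-of-the-envelope model makes this trade-off concrete. The Python sketch below is illustrative only: every round-trip time and call count is a hypothetical placeholder, not a measurement of any real region, and connection setup is ignored. It shows how a nearby region that must call back to a distant region for a missing capability can end up slower than a farther region that hosts everything locally.

```python
from dataclasses import dataclass


@dataclass
class Placement:
    """A candidate deployment. All figures are hypothetical, in milliseconds."""
    name: str
    user_rtt_ms: float          # round trip between the user and the serving region
    cross_region_rtt_ms: float  # round trip to a region hosting a missing dependency
    cross_region_calls: int     # sequential calls that leave the region per request
    processing_ms: float        # server-side work per request


def estimated_ttfb_ms(p: Placement) -> float:
    """Rough time to first byte: one user round trip, server processing, and every
    sequential cross-region call made before the response can start.
    Connection setup (DNS, TCP, TLS) is deliberately ignored."""
    return p.user_rtt_ms + p.processing_ms + p.cross_region_calls * p.cross_region_rtt_ms


nearby_but_incomplete = Placement(
    name="nearby region, one dependency hosted elsewhere",
    user_rtt_ms=10, cross_region_rtt_ms=35, cross_region_calls=3, processing_ms=20,
)
farther_but_complete = Placement(
    name="farther region, all services local",
    user_rtt_ms=35, cross_region_rtt_ms=0, cross_region_calls=0, processing_ms=20,
)

for p in (nearby_but_incomplete, farther_but_complete):
    print(f"{p.name}: ~{estimated_ttfb_ms(p):.0f} ms")
```

With these made-up numbers the nearby placement comes out at about 135 ms and the farther one at about 55 ms. With your own measured figures the answer may well flip, which is exactly why the evaluation is worth doing.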

Cloud services provide significant undifferentiated heavy lifting for most workloads, but one size may not fit all. Mature projects with dependencies spanning various services, especially across regions or with specific network requirements, need extra consideration. The services discussed here include both those provided directly by cloud providers and third-party integrations. Such projects depend on specific sets of service features, so an evaluation that goes beyond service availability and the service documentation is essential.

For smaller projects, limited feature sets can slow time to market, because teams must implement workarounds that substitute for missing features and take on the operational overhead of maintaining solutions the new region does not yet provide. That said, cloud offerings are generally IT commodities, and a service’s core functionality usually covers what is required. Hence, while businesses can use the provider’s service availability pages to check features in the first few days after a region launches, it’s important to thoroughly validate the specific feature subsets you need, even if the provider lists the service as generally available. Simply put, a service in its first days in a region may be limited to its core functionality.
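One way to make this validation repeatable is to write down the features your workload depends on and diff them against what you have actually confirmed in the target region. The Python sketch below assumes you maintain that inventory yourself (for example, from the provider’s documentation plus your own tests); the feature names, the need/nice labels, and `region_inventory` are all hypothetical.

```python
from typing import Dict, List, Set

# Hypothetical requirements for a database-backed workload.
# "need" = blocking if absent; "nice" = a workaround or deferral is acceptable.
REQUIREMENTS: Dict[str, str] = {
    "managed-postgres": "need",
    "read-replica": "need",
    "kerberos-auth": "nice",
    "point-in-time-restore": "need",
}


def feature_gaps(requirements: Dict[str, str], confirmed: Set[str]) -> Dict[str, List[str]]:
    """Split features not yet confirmed in the region into blocking and non-blocking gaps."""
    gaps: Dict[str, List[str]] = {"need": [], "nice": []}
    for feature, priority in sorted(requirements.items()):
        if feature not in confirmed:
            gaps[priority].append(feature)
    return gaps


# Hypothetical inventory of features verified in the new region so far.
region_inventory = {"managed-postgres", "point-in-time-restore"}

gaps = feature_gaps(REQUIREMENTS, region_inventory)
print("blocking:", gaps["need"])      # features that stop the migration today
print("non-blocking:", gaps["nice"])  # candidates for a workaround or later adoption
```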

Take databases as an example: a service being available doesn’t mean all of its supporting features are. These could include resiliency features, such as a replica for improved availability, or security features, such as support for a particular authentication protocol. It’s important to identify whether a feature is nice to have or need to have, whether 98% versus 90% availability matters for your business, and whether your existing security controls are built around certain protocols. These early limitations may stem from dependencies on other services that are not yet available in the new region. For critical use cases, identify the gaps, experiment, and evaluate whether the missing features justify investing in a workaround.
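To put the 98% versus 90% question in concrete terms, the small Python helper below converts an availability percentage into the downtime it permits over a period. It is plain arithmetic (assuming a 365-day year), not tied to any provider’s SLA terms.

```python
HOURS_PER_YEAR = 365 * 24               # 8,760 hours; ignores leap years
HOURS_PER_MONTH = HOURS_PER_YEAR / 12   # 730 hours on average


def allowed_downtime_hours(availability_pct: float, period_hours: float) -> float:
    """Downtime permitted by an availability target over a period of the given length."""
    if not 0 <= availability_pct <= 100:
        raise ValueError("availability must be between 0 and 100 percent")
    return period_hours * (1 - availability_pct / 100)


for target in (90.0, 98.0, 99.9):
    yearly = allowed_downtime_hours(target, HOURS_PER_YEAR)
    monthly = allowed_downtime_hours(target, HOURS_PER_MONTH)
    print(f"{target}%: ~{yearly:.1f} h/year, ~{monthly:.1f} h/month")
```

At 98%, that allows roughly 175 hours (about 7.3 days) of downtime a year; at 90%, roughly 876 hours (about 36.5 days).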

For complex services, planning is required to ensure that all required service features are available in the region, as well as any third-party integrations. If they are not, additional networking will be required to connect these components. Oh yes, networking does exist in the cloud: these services need to communicate with other microservices within the region or with external entities across regions. This makes evaluating data transfer costs and network performance metrics an important factor in migration.
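Cross-region traffic is typically billed per gigabyte, so a rough monthly estimate helps decide whether a missing local service justifies a workaround. The flows and rates in this Python sketch are hypothetical placeholders, not any provider’s published prices; substitute figures from your provider’s pricing pages for the exact source and destination regions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Flow:
    """A recurring data flow between components. Volumes and rates are hypothetical."""
    description: str
    gb_per_month: float
    usd_per_gb: float  # placeholder rate; look up the real price for this path


def monthly_transfer_cost(flows: List[Flow]) -> float:
    """Total estimated monthly data transfer cost across all flows."""
    return sum(f.gb_per_month * f.usd_per_gb for f in flows)


flows = [
    Flow("app (new region) -> analytics DB (other region)", gb_per_month=500, usd_per_gb=0.09),
    Flow("nightly backup copy to other region", gb_per_month=2000, usd_per_gb=0.02),
]

for f in flows:
    print(f"{f.description}: ${f.gb_per_month * f.usd_per_gb:,.2f}")
print(f"total: ${monthly_transfer_cost(flows):,.2f} per month")
```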

Establish Your Guardrails: Balancing Innovation with Control

Establishing guardrails should be a foundational step before experimentation; consider practicing governance as an enabler of safe innovation rather than a barrier to progress.

After understanding the requirements and potential use cases for the new region, put safety nets in place for cost and security. Worst-case scenarios vary by business. For instance, if you’re a data provider, data exfiltration or a ransomware attack would be a significant concern, while smaller projects typically face misconfiguration risks and operational monitoring gaps.

A guardrail is something that protects you from a foreseeable and avoidable event. In the cloud, these protective boundaries enable teams to innovate confidently while minimizing risk exposure.

The cloud’s flexible “pay-as-you-go” pricing model, billed per unit of time, requires equally adaptive cost management strategies that balance experimentation with financial control. Consider establishing a comprehensive cost allocation strategy through:

- Budgeting (monitored by resource type, usage, or service)

- Tagging of cloud resources, which enables cost categorization for more complex projects and provides the granularity needed for better cost anomaly detection
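As a sketch of how tags feed cost categorization and anomaly detection, the Python code below groups billing line items by a cost-allocation tag and flags a day whose spend sits well above the recent average. The line-item shape, the `team` tag key, and the three-standard-deviation threshold are assumptions for illustration; real billing exports and providers’ built-in anomaly detection work differently.

```python
from collections import defaultdict
from statistics import mean, pstdev
from typing import Dict, List


def allocate_costs(line_items: List[dict], tag_key: str) -> Dict[str, float]:
    """Sum cost per value of `tag_key`; spend without the tag lands in 'untagged'."""
    totals: Dict[str, float] = defaultdict(float)
    for item in line_items:
        owner = item.get("tags", {}).get(tag_key, "untagged")
        totals[owner] += item["cost"]
    return dict(totals)


def is_anomalous(daily_history: List[float], today: float, k: float = 3.0) -> bool:
    """Flag today's spend if it exceeds the historical mean by more than k standard
    deviations. A perfectly flat history makes any increase count as anomalous."""
    if len(daily_history) < 2:
        return False  # not enough history to judge
    return today > mean(daily_history) + k * pstdev(daily_history)


# Hypothetical billing line items; real billing exports use their own schema.
items = [
    {"service": "compute", "cost": 42.0, "tags": {"team": "web"}},
    {"service": "storage", "cost": 8.0, "tags": {"team": "data"}},
    {"service": "compute", "cost": 5.5, "tags": {}},  # untagged, so hard to attribute
]
print(allocate_costs(items, "team"))

web_daily_spend = [10.0, 11.0, 9.5, 10.5, 10.0]  # hypothetical recent daily spend for "web"
print(is_anomalous(web_daily_spend, today=25.0))
```

The untagged bucket is worth watching on its own: the more spend lands there, the less useful per-team anomaly detection becomes.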

Cloud providers typically offer free tiers for experimentation, but establishing predefined budgets (such as a “Zero-Spend Budget”) helps keep usage within acceptable limits. For projects within existing organizations, align with organizational policies that may already be inherited from parent entities, including cost categories and allocation tags. Tags can also serve as automation tags that help achieve elasticity goals.
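Both ideas reduce to simple rules. The Python sketch below shows a budget check where a budget of zero alerts on any spend, plus a hypothetical `auto-stop` tag convention used to decide which instances to stop outside office hours in Thailand (UTC+7, no daylight saving time). The tag name and value, the 80% threshold, the 09:00 to 18:00 weekday window, and the instance record shape are all assumptions; in practice a scheduler or the provider’s own budget and scheduling features would act on the result.

```python
from datetime import datetime, timedelta, timezone
from typing import List

BANGKOK = timezone(timedelta(hours=7), "ICT")  # Thailand does not observe daylight saving time


def budget_alert(spend_usd: float, budget_usd: float, threshold_pct: float = 80.0) -> bool:
    """Alert when spend reaches threshold_pct of the budget.
    A zero budget ("zero-spend") alerts on any spend at all."""
    if budget_usd == 0:
        return spend_usd > 0
    return spend_usd >= budget_usd * threshold_pct / 100


def instances_to_stop(instances: List[dict], now: datetime) -> List[str]:
    """IDs of running instances tagged auto-stop=office-hours (hypothetical convention)
    when it is outside 09:00-18:00 on a weekday in Bangkok time."""
    local = now.astimezone(BANGKOK)
    if local.weekday() < 5 and 9 <= local.hour < 18:
        return []  # inside office hours: leave everything running
    return [
        i["id"] for i in instances
        if i.get("tags", {}).get("auto-stop") == "office-hours" and i["state"] == "running"
    ]


fleet = [
    {"id": "i-dev-1", "state": "running", "tags": {"auto-stop": "office-hours"}},
    {"id": "i-prod-1", "state": "running", "tags": {"env": "prod"}},
]
monday_evening = datetime(2025, 3, 3, 14, 0, tzinfo=timezone.utc)  # 21:00 Monday in Bangkok
print(instances_to_stop(fleet, monday_evening))
print(budget_alert(spend_usd=0.01, budget_usd=0))
```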

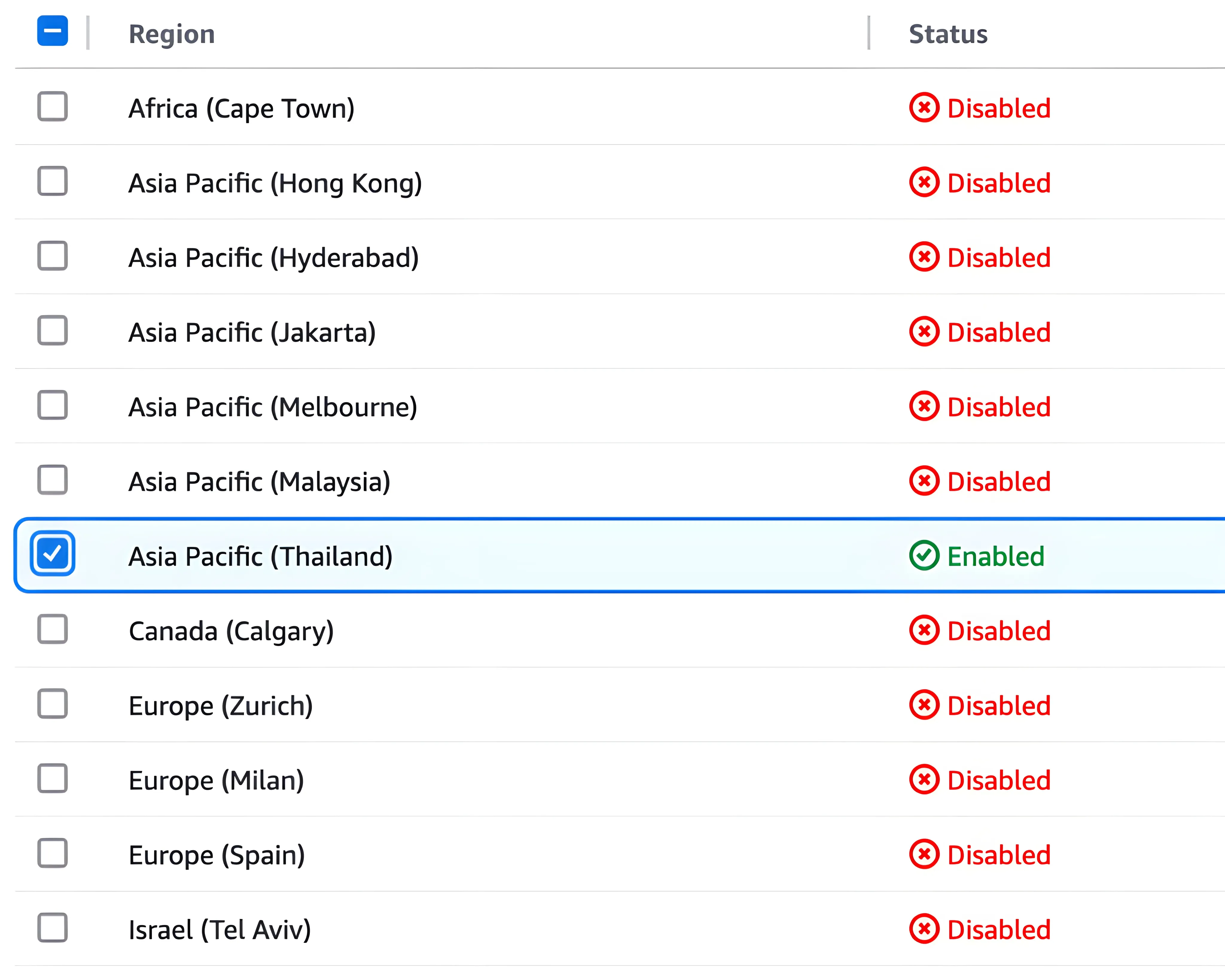

Larger organizations benefit from centralized controls that enforce cost and security policies across the organization. These controls cascade from root to child resources, preventing non-compliant actions before they occur. This approach may include region-specific opt-in requirements—a practice also supported by cloud providers themselves as an additional protective measure.
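On AWS, this kind of root-to-child control is typically implemented with service control policies (SCPs) in AWS Organizations. The Python sketch below builds a commonly used pattern, a Deny on requests outside an allow-list of regions via the `aws:RequestedRegion` condition key, and prints it as JSON. The region codes (`ap-southeast-7`, assumed here to be Asia Pacific (Thailand), and `ap-southeast-1` for Singapore) and the short list of exempted global services are illustrative assumptions; compare against AWS’s published SCP examples before attaching anything.

```python
import json
from typing import List

# Global services are served from a fixed region and usually need to be exempted
# from a region deny. This list is illustrative, not exhaustive.
GLOBAL_SERVICE_ACTIONS = [
    "iam:*", "organizations:*", "sts:*", "support:*",
    "budgets:*", "cloudfront:*", "route53:*",
]


def region_allowlist_scp(allowed_regions: List[str]) -> dict:
    """Build an SCP document that denies non-global actions requested outside allowed_regions."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyOutsideAllowedRegions",
                "Effect": "Deny",
                "NotAction": GLOBAL_SERVICE_ACTIONS,
                "Resource": "*",
                "Condition": {"StringNotEquals": {"aws:RequestedRegion": allowed_regions}},
            }
        ],
    }


# Assumed region codes: verify against the provider's current region list.
policy = region_allowlist_scp(["ap-southeast-7", "ap-southeast-1"])
print(json.dumps(policy, indent=2))
```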

Common security guardrails include:

- Control policies preventing accidental public exposure of protected data

- Policy-as-code validators for undesirable infrastructure changes and usage patterns
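To illustrate the policy-as-code idea, here is a tiny Python validator that runs over a planned set of resources before deployment and reports violations. Dedicated policy engines have their own languages and data formats; the resource dictionaries and both rules below are hypothetical and only show the shape of such a check.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Violation:
    resource: str
    rule: str
    message: str


# A rule inspects one planned resource and returns a message if it is non-compliant.
Rule = Callable[[dict], Optional[str]]


def no_public_storage(resource: dict) -> Optional[str]:
    if resource["type"] == "storage_bucket" and resource.get("public_read", False):
        return "storage bucket allows public read access"
    return None


def encryption_required(resource: dict) -> Optional[str]:
    if resource["type"] in {"storage_bucket", "database"} and not resource.get("encrypted", False):
        return "data store is not encrypted at rest"
    return None


def validate(plan: List[dict], rules: List[Rule]) -> List[Violation]:
    """Apply every rule to every planned resource and collect the violations."""
    violations = []
    for resource in plan:
        for rule in rules:
            message = rule(resource)
            if message:
                violations.append(Violation(resource["name"], rule.__name__, message))
    return violations


# Hypothetical planned changes, e.g. parsed from an IaC plan.
plan = [
    {"name": "customer-exports", "type": "storage_bucket", "public_read": True, "encrypted": True},
    {"name": "orders-db", "type": "database", "encrypted": False},
    {"name": "web-assets", "type": "storage_bucket", "public_read": False, "encrypted": True},
]

for v in validate(plan, [no_public_storage, encryption_required]):
    print(f"{v.resource}: [{v.rule}] {v.message}")
```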

Cost guardrails might restrict available regions, limit service offerings, and control specific high-cost configurations.

Establishing guardrails is itself an exercise in experimentation: you can also define them as detective controls, which allow the behavior but flag it for later review. Treat it as a journey, and maintain a level of operational readiness by consistently reviewing your contact information and alternate contacts for relevant teams, and by acting on notifications about workload changes that may require your attention.
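The difference between preventive and detective controls can be modeled as an enforcement mode on the same rule. In this hypothetical Python sketch, cost guardrails of the kind described above (restricting regions and high-cost configurations) either block a change outright or let it through while recording a finding for review. The allowed region code, the size names, and the `Mode` values are assumptions.

```python
from enum import Enum
from typing import List, Tuple


class Mode(Enum):
    PREVENTIVE = "preventive"  # block the change
    DETECTIVE = "detective"    # allow the change, record a finding for later review


ALLOWED_REGIONS = {"ap-southeast-7"}             # assumed region code
EXPENSIVE_SIZES = {"gpu-xlarge", "mem-8xlarge"}  # hypothetical high-cost sizes


def cost_findings(change: dict) -> List[str]:
    """List every cost guardrail the proposed change would trip."""
    findings = []
    if change["region"] not in ALLOWED_REGIONS:
        findings.append(f"region {change['region']} is not approved")
    if change.get("size") in EXPENSIVE_SIZES:
        findings.append(f"size {change['size']} requires cost review")
    return findings


def evaluate(change: dict, mode: Mode) -> Tuple[bool, List[str]]:
    """Return (allowed, findings). Detective mode never blocks; it only reports."""
    findings = cost_findings(change)
    allowed = not findings or mode is Mode.DETECTIVE
    return allowed, findings


change = {"name": "ml-experiment", "region": "ap-southeast-7", "size": "gpu-xlarge"}
print(evaluate(change, Mode.PREVENTIVE))  # blocked, with the reason
print(evaluate(change, Mode.DETECTIVE))   # allowed, but flagged for later review
```

Starting new rules in detective mode and promoting them to preventive once the findings look right is one way to experiment with guardrails without blocking teams prematurely.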

By focusing on detection and prevention rather than just restriction, guardrails can facilitate innovation while maintaining appropriate controls—allowing teams to move quickly with confidence in unfamiliar cloud regions.

Take the Leap: Deploy Your First Service

With guardrails established and capabilities tested, it’s time to deploy the service into the region. If you’re choosing the cloud, you’re likely choosing repeatability, so deployments benefit from Infrastructure as Code (IaC) to ensure consistency. Tools such as HashiCorp Terraform, AWS CloudFormation, or Azure Resource Manager (ARM) templates document your infrastructure decisions while facilitating future expansion or migration to other regions.
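As a minimal illustration using AWS CloudFormation, the Python sketch below emits a template that hard-codes no region, so the same definition can be deployed unchanged wherever it is needed. It defines one S3 bucket with public access blocked and tags it with the deployment region through the `AWS::Region` pseudo parameter; the logical ID `SiteBucket`, the tag keys, and the project name are hypothetical choices.

```python
import json


def site_bucket_template(project: str) -> dict:
    """A region-agnostic CloudFormation template for a static-site bucket."""
    return {
        "AWSTemplateFormatVersion": "2010-09-09",
        "Description": f"Static site bucket for {project}",
        "Resources": {
            "SiteBucket": {
                "Type": "AWS::S3::Bucket",
                "Properties": {
                    # Guardrail baked into the template: block all forms of public access.
                    "PublicAccessBlockConfiguration": {
                        "BlockPublicAcls": True,
                        "BlockPublicPolicy": True,
                        "IgnorePublicAcls": True,
                        "RestrictPublicBuckets": True,
                    },
                    "Tags": [
                        {"Key": "project", "Value": project},
                        # Resolved at deploy time to the region the stack is created in.
                        {"Key": "region", "Value": {"Ref": "AWS::Region"}},
                    ],
                },
            }
        },
        "Outputs": {"BucketName": {"Value": {"Ref": "SiteBucket"}}},
    }


template = site_bucket_template("pilot-site")
print(json.dumps(template, indent=2))
```

Saved to a file such as `site-bucket.json`, the same template could be deployed to a chosen region with `aws cloudformation deploy --template-file site-bucket.json --stack-name pilot-site --region <region-code>`.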

If the services your complex workloads need are not ready yet, start with a simpler workload, such as a static website or an internal tool, to understand the environment and the benefits of operating in the new region. This lets you validate performance, connectivity, and cost assumptions without risking business-critical operations, while gaining valuable insight into the region.
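For a pilot such as a static website, one simple way to check latency assumptions is to sample time to first byte from where your users are. The Python sketch below uses only the standard library and approximates TTFB as the time from opening the connection to receiving the response status line and headers, so it includes DNS, TCP, and TLS setup. The URL is a placeholder, and a few dozen samples from one machine are a rough indicator, not a substitute for real user monitoring.

```python
import http.client
import math
import statistics
import time
from typing import List
from urllib.parse import urlsplit


def measure_ttfb_ms(url: str, timeout: float = 10.0) -> float:
    """Approximate TTFB: connect, send a GET, and stop the clock when headers arrive."""
    parts = urlsplit(url)
    conn_cls = http.client.HTTPSConnection if parts.scheme == "https" else http.client.HTTPConnection
    conn = conn_cls(parts.hostname, parts.port, timeout=timeout)
    path = (parts.path or "/") + (f"?{parts.query}" if parts.query else "")
    try:
        start = time.perf_counter()
        conn.request("GET", path)      # opens the connection lazily, so setup is timed too
        response = conn.getresponse()  # returns once the status line and headers are read
        elapsed_ms = (time.perf_counter() - start) * 1000
        response.read()                # drain the body before closing
        return elapsed_ms
    finally:
        conn.close()


def summarize(samples_ms: List[float]) -> dict:
    """Median and nearest-rank 95th percentile of the samples."""
    if not samples_ms:
        raise ValueError("need at least one sample")
    ordered = sorted(samples_ms)
    p95_rank = math.ceil(0.95 * len(ordered))  # 1-based nearest rank
    return {"median_ms": statistics.median(ordered), "p95_ms": ordered[p95_rank - 1]}


# Placeholder URL: point this at the pilot workload in the new region.
# samples = [measure_ttfb_ms("https://pilot.example.com/") for _ in range(20)]
# print(summarize(samples))
```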

Moving to these new regions is more than a technical shift; it pushes us to rethink how we implement modern technology in ways that address regional needs and opportunities.

In my opinion, the arrival of cloud regions across Southeast Asia creates opportunities for businesses to leverage enterprise-grade computing resources with an improved local user experience. There’s also room for growing local cloud expertise to contribute to global technical communities, and it opens up possibilities for understanding how modern technology stacks shaped by cloud offerings, such as artificial intelligence, might work in local contexts.

What are your thoughts on the regional expansion of cloud services happening in Southeast Asia?
