Large language models (LLMs) have absolutely transformed how we live our lives, from providing conversational companions to effortlessly generating concepts, work products, actions, and inspiration. Multiple conversations with colleagues over the past few months have made it clear that AI is here to stay, and what's next on my mind is automation. How can someone leverage LLMs to coordinate routine tasks and explore new opportunities with less investment and, consequently, lower experimentation risk?

| Contents |
|---|
| Encouragement |
| Routine Tasks Being Automated Today |
| Experimentation |
| Resources & References |
| Effort & Cost |
| Reflection |

Encouragement

Technology is more accessible and easier to adopt than ever. First came the Internet, then capable smart devices, and now Big Tech's push to democratize AI as a great equalizer of knowledge, offered as an essential commodity with no significant upfront cost. Experimenting with technology in our professions should be the norm. We have already seen AI, especially LLMs, take over much of the programming world, and I have fully embraced it in my daily scripting and proof-of-concept integration work. In the latter half of 2025, I am starting to notice LLMs being mentioned in the financial industry for analysis work traditionally handled by machine learning (ML), a subset of AI. Traditional ML carries a higher adoption barrier because each model has to be built for a specific implementation, whereas a general-purpose LLM is already broadly trained.

Routine Tasks Being Automated Today

A broad range of routine tasks in software development, human resources, and consulting is now being automated with LLMs. Users typically choose a model for each use case by weighing its knowledge, problem-solving, and reasoning capabilities, as measured by benchmarks that align with their specific tasks. Most users have experience with general-purpose LLMs such as GPT-4o, Claude 3/4, Gemini 2.5 Pro, and Llama 4. Alongside them are domain-specific models like BloombergGPT for finance, Med-PaLM for healthcare, and BioBERT for biomedicine, which are trained or fine-tuned for industry needs and can deliver higher accuracy and context sensitivity on specialized tasks.

Simply put, if a task follows established linguistic patterns, LLMs should perform well on it. While I am not an industry expert in any of these fields, I am convinced that everyone can get creative about using LLMs for routine tasks. Our digital communication with others has produced extensive data on the processes these tasks require, and there are typically recognizable patterns of what good performance looks like. These patterns are now being used to train and fine-tune task-specific LLMs.

Task-specific models are fine-tuned for narrow areas using curated, labeled data and supervised learning, achieving higher domain accuracy. General models use broader, unlabeled datasets for self-supervised training, delivering flexibility across diverse work but with lower peak accuracy in specialized tasks. Organizations often combine both approaches for optimal results: general models for flexibility and rapid deployment, and task-specific models for precision, compliance, and reliability in high-stakes settings.
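One simple way to combine both approaches is a router that sends high-stakes, domain-specific requests to a task-specific model and everything else to a general model. The minimal Python sketch below assumes that setup; the model names, the `Task` fields, and the routing rule are hypothetical placeholders for illustration, not a recommendation of particular models.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical model identifiers; substitute the models you actually use.
GENERAL_MODEL = "general-purpose-llm"
DOMAIN_MODELS = {
    "finance": "finance-tuned-llm",
    "healthcare": "healthcare-tuned-llm",
}


@dataclass
class Task:
    """A unit of work to route; these fields are illustrative assumptions."""
    description: str
    domain: Optional[str] = None  # e.g. "finance"; None for general work
    high_stakes: bool = False     # compliance- or safety-sensitive?


def choose_model(task: Task) -> str:
    """Use a task-specific model for high-stakes work in a covered domain;
    fall back to the general model for flexibility everywhere else."""
    if task.high_stakes and task.domain in DOMAIN_MODELS:
        return DOMAIN_MODELS[task.domain]
    return GENERAL_MODEL


if __name__ == "__main__":
    tasks = [
        Task("Draft a friendly meeting recap"),
        Task("Summarize a quarterly earnings filing", "finance", high_stakes=True),
        Task("Brainstorm blog titles about budgeting", "finance"),
    ]
    for t in tasks:
        print(f"{t.description!r} -> {choose_model(t)}")
```

Real routers often use richer signals (cost budgets, confidence scores, or a classifier), but the split between precision and flexibility stays the same.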

Experimentation

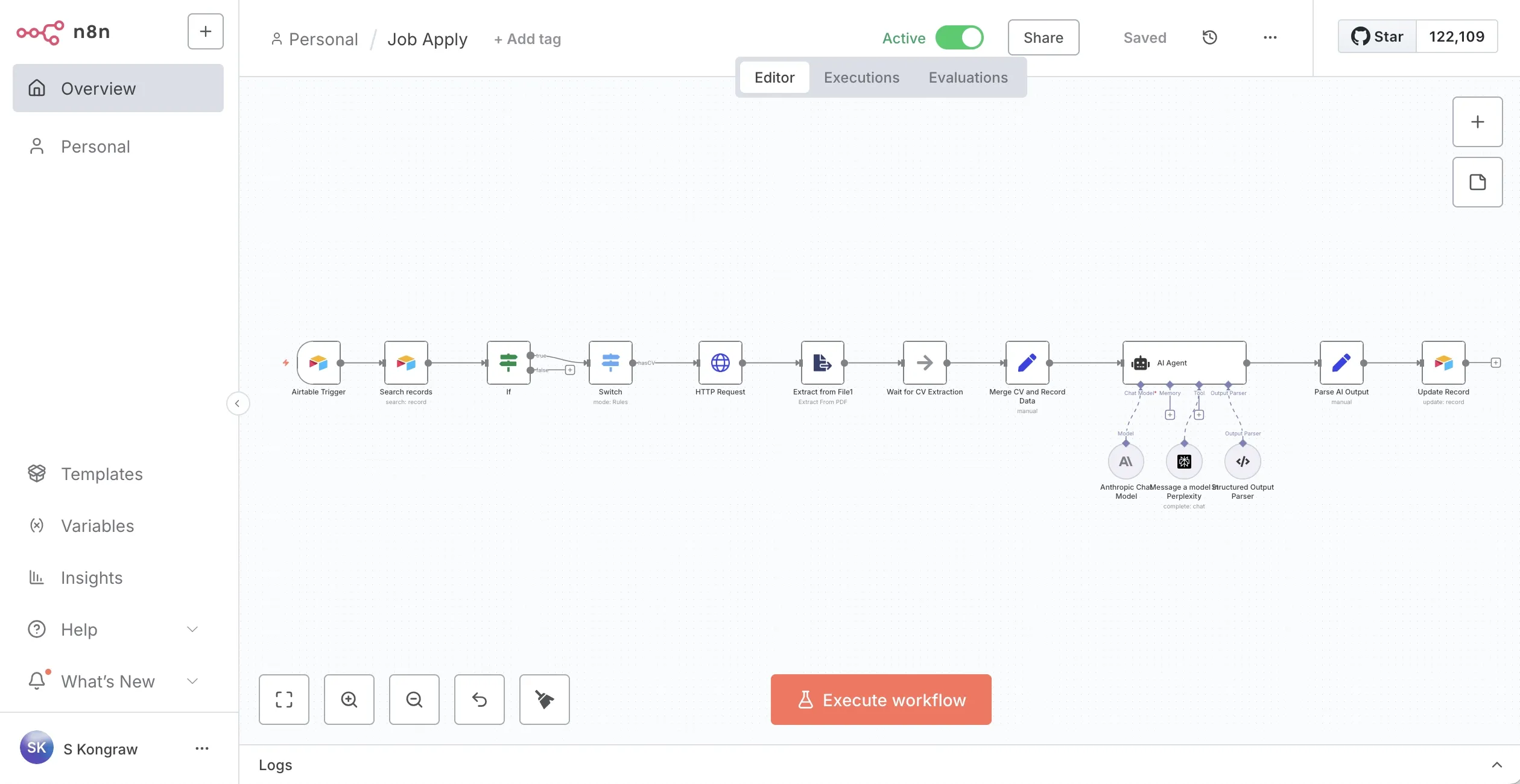

I highly encourage you to skip my experimentation and start your own. I have been experimenting with LLMs for a while now, and I have found that the best way to learn is by doing. The sections that follow describe a proof-of-concept (PoC) I created to automate a routine task using LLMs and an automation platform.

Resources & References

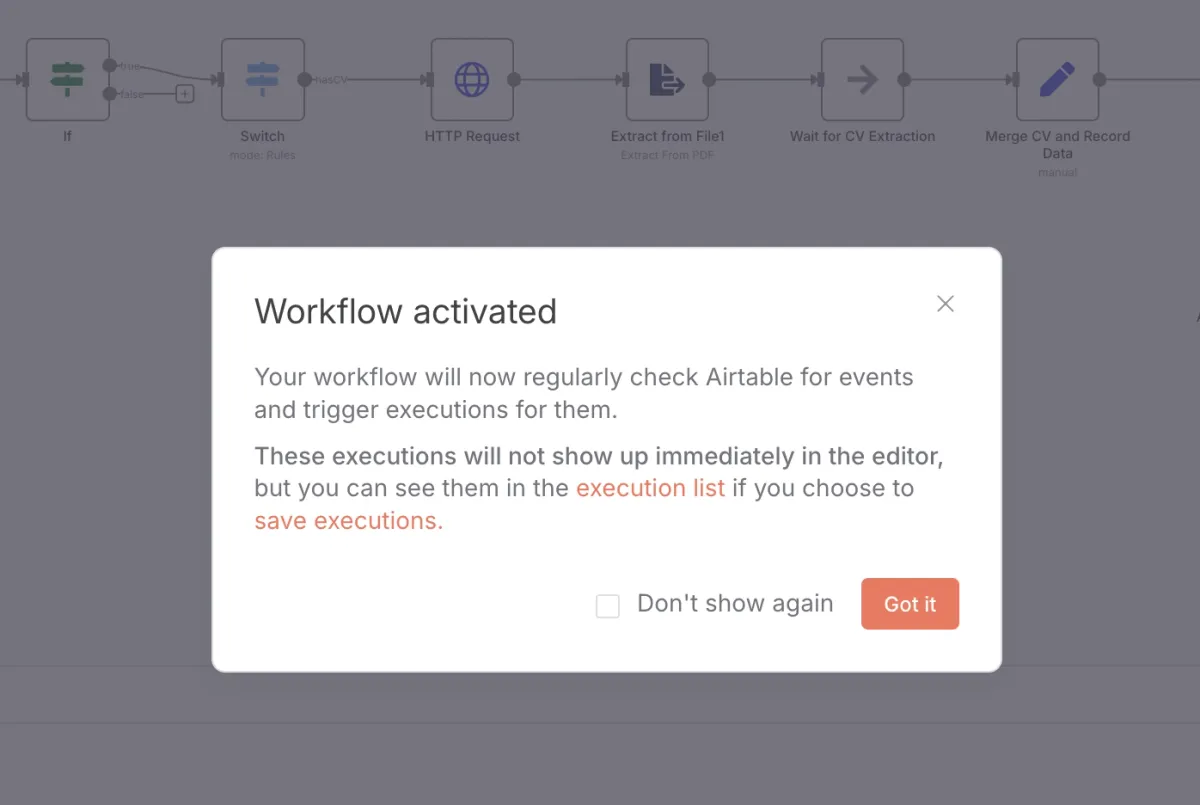

This is the only section you need to get started with your own experimentation on n8n, an automation platform that lets you automate tasks and integrate systems by connecting different services and applications into workflows. It is source-available under a "fair-code" license and free to self-host.

For the self-hosted option, I recommend the following resources:

- How I Secured My n8n Instance with Cloudflare Zero Trust (No Open Ports, No Public IP) (further edits to come) - This article explains how to host n8n on your own personal computer and secure it with Cloudflare Zero Trust, so you can access it from anywhere without exposing it to the public internet.

For the cloud-based option, I recommend the following resources:

- n8n Cloud - The official, fully managed n8n service; its page also explains the pricing model.

When creating your workflows, you can use the following resources to help you get started:

- n8n Documentation on built-in nodes - This documentation explains what a node is and which nodes are built into n8n. Each node's page details how to configure it, how to provide credentials, and which parameters are available. A good node to try first is the built-in Airtable node, thanks to its simplicity.

- n8n Documentation for LLMs, GitHub Synaptiv-AI/awesome-n8n - These resources show how LLMs, including ChatGPT, can generate n8n workflows when given a properly formatted llms.txt, reducing the need to configure each node manually. In my experience, you import the generated workflow file into n8n and may need to tweak it in the n8n editor before it works as expected, but it is a great starting point.
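Because LLM-generated workflows often need tweaking, a quick check before importing can catch one common problem: connections that point at node names that don't exist. The sketch below assumes the general shape of an exported n8n workflow (a `nodes` list plus a `connections` map keyed by source node name); the node types and `typeVersion` values are illustrative, your export may carry more fields, and `find_dangling_connections` is a hypothetical helper, not part of n8n.

```python
import json

# A trimmed-down workflow in the general shape n8n exports. The node types and
# typeVersion values are illustrative assumptions; "Summarize" is deliberately
# missing from "nodes" to mimic a typical LLM slip.
WORKFLOW_JSON = """
{
  "name": "PoC workflow",
  "nodes": [
    {"name": "Manual Trigger", "type": "n8n-nodes-base.manualTrigger",
     "typeVersion": 1, "position": [0, 0], "parameters": {}},
    {"name": "Airtable", "type": "n8n-nodes-base.airtable",
     "typeVersion": 2, "position": [220, 0], "parameters": {}}
  ],
  "connections": {
    "Manual Trigger": {"main": [[{"node": "Airtable", "type": "main", "index": 0}]]},
    "Airtable": {"main": [[{"node": "Summarize", "type": "main", "index": 0}]]}
  }
}
"""


def find_dangling_connections(workflow: dict) -> list[tuple[str, str]]:
    """Return (source, target) pairs where either end is not a defined node."""
    names = {node["name"] for node in workflow.get("nodes", [])}
    dangling = []
    for source, outputs in workflow.get("connections", {}).items():
        for branches in outputs.values():   # one entry per output type, e.g. "main"
            for branch in branches:          # one list per output index
                for link in branch:
                    target = link["node"]
                    if source not in names or target not in names:
                        dangling.append((source, target))
    return dangling


if __name__ == "__main__":
    wf = json.loads(WORKFLOW_JSON)
    for source, target in find_dangling_connections(wf):
        print(f"Fix before importing: {source} -> {target}")
```

Fixing these by hand (or pasting the report back into the chatbot) is usually quicker than hunting for a disconnected node in the editor.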

Effort & Cost

Below are the approximate effort and actual costs of the PoC, which automates a routine task using LLMs and an automation platform. Based on my own experimentation, it aims to show the minimum effort and cost required.

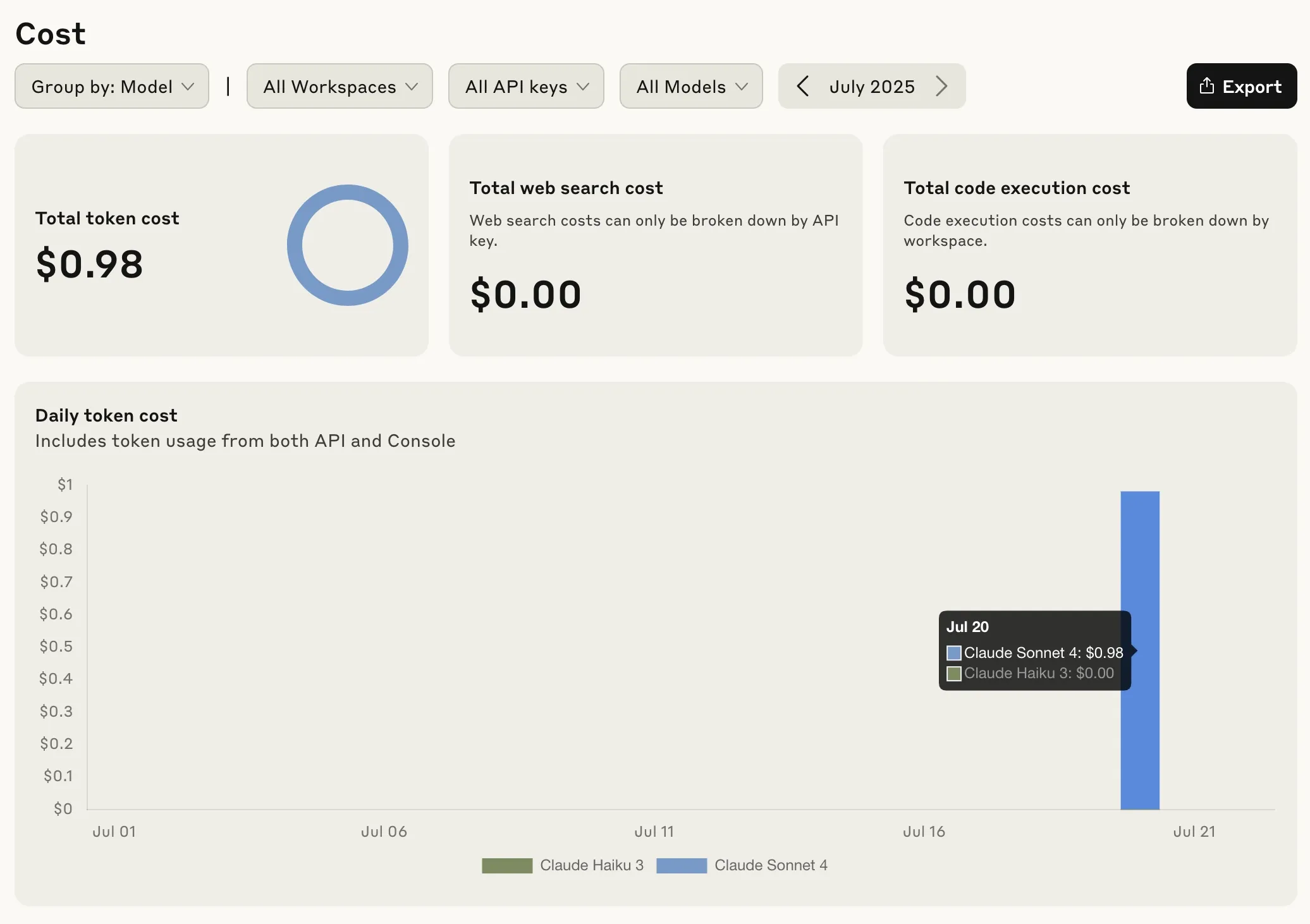

The cost breakdown above shows Claude Sonnet 4 usage from Anthropic; Sonnet 4 played the central role of orchestrating the various tools and integrations.

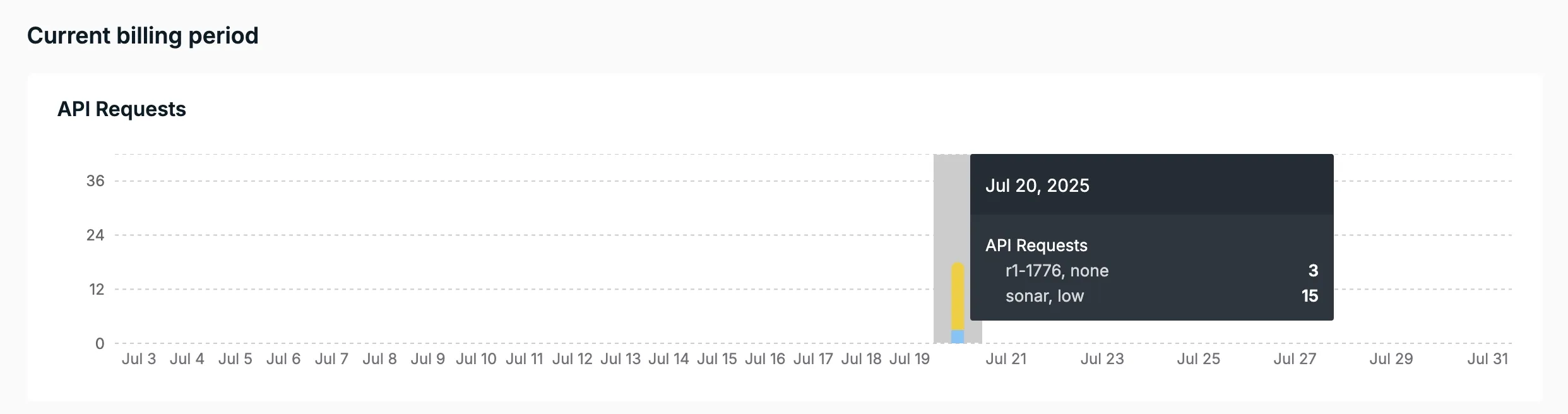

The cost breakdown above shows Sonar usage from Perplexity, which Sonnet 4 called as a tool to research data from the web.
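In my PoC this wiring lived inside n8n nodes, but at the API level the pattern looks roughly like the sketch below: Sonnet 4 is offered a web-research tool, and when it calls that tool, the query is forwarded to Perplexity's Sonar model. The sketch only builds request bodies and makes no network calls. The tool name `web_research`, the model IDs, and the helper functions are assumptions for illustration; check the Anthropic Messages API and Perplexity API references for current field names and model IDs.

```python
import json

ANTHROPIC_MODEL = "claude-sonnet-4-20250514"  # assumed model ID; verify in Anthropic's docs
SONAR_MODEL = "sonar"                          # assumed Perplexity model name

# Tool definition in the Anthropic Messages API format: a name, a description,
# and a JSON Schema for the tool's input.
WEB_RESEARCH_TOOL = {
    "name": "web_research",  # hypothetical tool name
    "description": "Search the web for current information and return a summary.",
    "input_schema": {
        "type": "object",
        "properties": {"query": {"type": "string", "description": "What to research"}},
        "required": ["query"],
    },
}


def orchestrator_request(task: str) -> dict:
    """Body for POST https://api.anthropic.com/v1/messages that offers the tool."""
    return {
        "model": ANTHROPIC_MODEL,
        "max_tokens": 1024,
        "tools": [WEB_RESEARCH_TOOL],
        "messages": [{"role": "user", "content": task}],
    }


def sonar_request(tool_use_block: dict) -> dict:
    """Translate a `tool_use` block from Claude into a chat request body for
    POST https://api.perplexity.ai/chat/completions."""
    if tool_use_block.get("name") != WEB_RESEARCH_TOOL["name"]:
        raise ValueError(f"unexpected tool: {tool_use_block.get('name')}")
    return {
        "model": SONAR_MODEL,
        "messages": [{"role": "user", "content": tool_use_block["input"]["query"]}],
    }


def tool_result_message(tool_use_id: str, research_text: str) -> dict:
    """User turn that hands Sonar's answer back to Claude as a tool result."""
    return {
        "role": "user",
        "content": [{"type": "tool_result", "tool_use_id": tool_use_id,
                     "content": research_text}],
    }


if __name__ == "__main__":
    print(json.dumps(orchestrator_request("Find three n8n tutorials"), indent=2))
    # Simulated tool_use block, shaped like the ones Claude returns.
    block = {"type": "tool_use", "id": "toolu_example", "name": "web_research",
             "input": {"query": "beginner n8n tutorials"}}
    print(json.dumps(sonar_request(block), indent=2))
```

In a full loop, you would append Claude's assistant turn (the one containing the `tool_use` block) to `messages`, add the `tool_result_message`, and call the Messages API again until Claude stops requesting tools. Each round trip resends the conversation, which is why orchestration dominates the token bill.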

| Action item | Actual time |
|---|---|
| Design prompts and workflow | 1-2 hours |
| Set up automation platform | 1 hour (one-time) |
| Prepare integrations and credentials | 30 minutes |
| Drag and drop nodes into the workflow | 4 hours (could be shortened with chatbots) |
| Evaluate outputs | 1 hour |
| Total | Roughly a day (7.5-8.5 hours) |

The amount of effort may vary depending on the complexity of the task, the number of integrations, and the level of customization required. Over time, as you become more familiar with the tools and platforms, the time required for setup and configuration will decrease significantly.

| Provider | Actual cost | Description |
|---|---|---|
| Perplexity | 0.11 USD | Sonar PoC usage between Jul 3, 2025 and Aug 1, 2025; requires a minimum 5 USD top-up |
| Anthropic | 0.98 USD | Claude Sonnet 4 PoC usage, 237k total tokens |
| n8n | - | Self-hosted, so no platform fee; a managed deployment costs less than 24 EUR/month |
| Total | 1.09 USD | - |

The primary cost driver is the LLM usage for orchestrating interactions across tools and integrations. The cost of LLMs can vary widely depending on the model, provider, and usage patterns. For instance, advanced models like GPT-4o or Claude Sonnet 4 may cost more than smaller or less powerful models.
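Since token usage drives the bill, a back-of-the-envelope estimator helps when comparing models. The sketch assumes per-million-token pricing with separate input and output rates. The default rates (3 USD input, 15 USD output per million tokens) reflect Anthropic's published list price for Claude Sonnet 4 at the time of writing, so verify current pricing; the token split in the example is hypothetical, because my 237k total was not broken down into input and output.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Per-million-token prices in USD (assumed values; check your provider)."""
    input_per_mtok: float
    output_per_mtok: float


SONNET_4 = Pricing(input_per_mtok=3.00, output_per_mtok=15.00)


def estimate_cost(input_tokens: int, output_tokens: int, pricing: Pricing) -> float:
    """Estimated cost in USD for the given input and output token counts."""
    return (input_tokens * pricing.input_per_mtok
            + output_tokens * pricing.output_per_mtok) / 1_000_000


if __name__ == "__main__":
    # Hypothetical split of roughly 237k tokens. Agentic workflows tend to be
    # input-heavy because every tool call resends the growing conversation.
    cost = estimate_cost(input_tokens=215_000, output_tokens=22_000, pricing=SONNET_4)
    print(f"Estimated cost: ${cost:.2f}")
```

If those rates applied to the PoC, 237k tokens would cost about 0.71 USD if all were input and about 3.56 USD if all were output, so a 0.98 USD bill suggests the usage was mostly input tokens.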

The expense of using automation platforms such as n8n also depends on the number of workflows, the extent of integrations, and the frequency of use. Opting for a self-hosted solution can reduce platform costs but may require more initial effort for setup and ongoing maintenance.

Additionally, local LLMs are becoming viable as personal devices grow more powerful. Open-weight options like Mistral 7B (and, on high-end hardware, Llama 4) can run on your own machine, potentially reducing costs further. However, self-hosting adds operational overhead to keep models reliable and secure, along with an upfront investment of time and resources.

Reflection

Reflecting on the time before the introduction of LLMs, I remember how workflow automation relied heavily on complex switch flows, which often made managing conditions challenging and cumbersome. Now, LLMs significantly simplify these complexities (behind their own layer of complexities, eh?!), allowing us to build workflows that are not only meaningful but also easy to understand and maintain, though running them incurs token costs tied to compute power.
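To illustrate the shift, the sketch below contrasts a hand-written switch flow with an LLM-based classifier for routing incoming messages. The categories, keywords, and `call_llm` parameter are hypothetical: `call_llm` stands in for whatever model call your platform makes (in n8n, an LLM node), and the stub is hard-coded so the example runs offline. It shows the plumbing, not real model behavior.

```python
from typing import Callable

CATEGORIES = ["billing", "bug_report", "feature_request", "other"]


def route_with_switch(message: str) -> str:
    """Pre-LLM style: every condition is spelled out, and each new phrasing
    means another branch to add and maintain."""
    text = message.lower()
    if "invoice" in text or "refund" in text or "charge" in text:
        return "billing"
    if "error" in text or "crash" in text or "broken" in text:
        return "bug_report"
    if "would be nice" in text or "please add" in text:
        return "feature_request"
    return "other"


def route_with_llm(message: str, call_llm: Callable[[str], str]) -> str:
    """LLM style: describe the categories once and let the model classify.
    The reply is validated, so an unexpected answer falls back to "other"."""
    prompt = (
        "Classify the message into exactly one of these categories: "
        + ", ".join(CATEGORIES)
        + ". Reply with the category name only.\n\nMessage: "
        + message
    )
    answer = call_llm(prompt).strip().lower()
    return answer if answer in CATEGORIES else "other"


def fake_llm(prompt: str) -> str:
    """Offline stand-in for a real model call, used only for this demo."""
    return "billing" if "money back" in prompt.lower() else "other"


if __name__ == "__main__":
    msg = "Can I get my money back for last month?"
    print(route_with_switch(msg))         # no keyword matches -> other
    print(route_with_llm(msg, fake_llm))  # stub recognizes the intent -> billing
```

The validation step matters: the model's output becomes one more input to check, which is part of the "own layer of complexities" that replaces the switch branches.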

While the temptation to over-optimize is a familiar engineering dilemma, I recognize the need to be thoughtful about how much time I invest upfront in building a solid automation for valuable routines. Whether you’re passionate about optimization or prefer to delegate it, you’ll ultimately benefit from greater efficiency. Importantly, you don’t need to be an expert to begin leveraging LLM-powered automation in your daily or professional activities.

With the ecosystem rapidly expanding, costs falling, and abundant support resources available, there's never been a better time to start. Begin with small experiments; you'll quickly discover how productivity compounds as you identify the best LLM tools for your needs. The biggest hurdle is simply getting started: once you automate your first workflow, new opportunities for automation will reveal themselves more easily. If you find yourself doing the same thing over and over again, this is your call to try LLMs and automation platforms and see how they can streamline your routine tasks.
